Fig. 7: Synthetic Data Generation

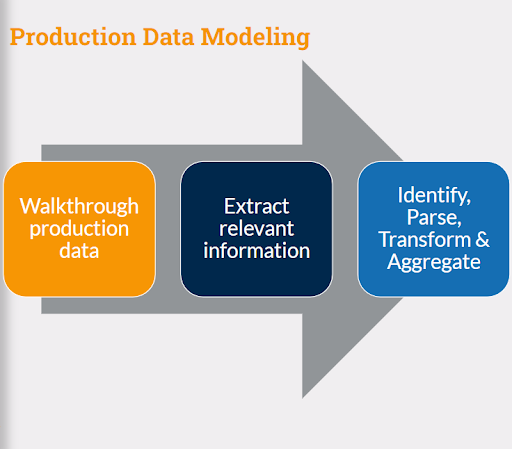

Now that we have a model in place, we want to generate data that mimics production for a new feature release, for example, one that moves data to a different storage model. The requirements also state that because this storage model is offered at a very low price, clients are expected to store more data in it, so it must be tested at 2x the production load.

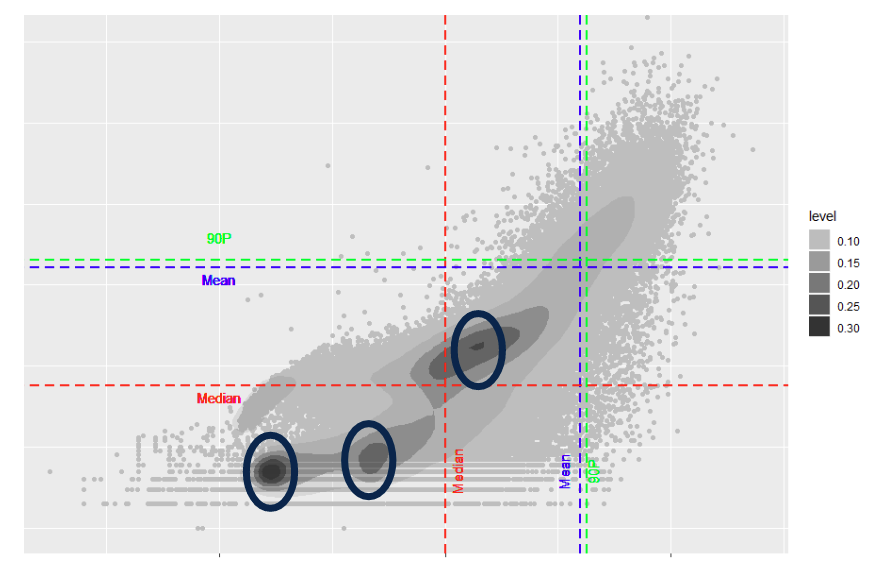

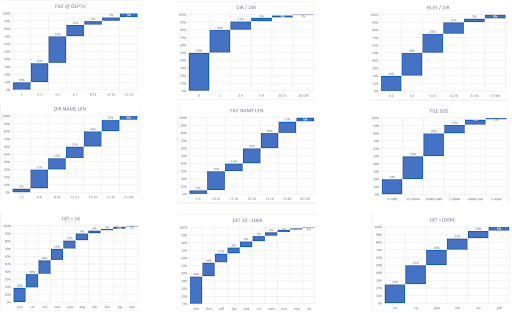

The generation process works as follows:

1. Decide the size of the target filesystem. This is the input to the code.
2. The code divides the total size into files of different sizes that fit the file size distribution.
3. Based on the total number of files, the directory distribution is defined, i.e., the total number of directories at each level.
4. Files are distributed across these directories.
5. File names are generated.
6. Extensions are mapped to the files.

At each step, the generated arrays are validated. If validation fails, the process repeats and modifies the input model.

Finally, the best-fit arrays are chosen and passed to the next step, i.e., data generation.
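The steps above can be sketched roughly as follows. This is a minimal illustration, not the production code: the size buckets, extension shares, fan-out constants, and all function names are hypothetical placeholders for values that would come from the production model, and the retry loop simply re-draws with a new seed instead of adjusting the model, which is a simplification.

```python
import random
import string
from dataclasses import dataclass

# Hypothetical model parameters; in practice these come from the production model.
SIZE_BUCKETS = [(1_024, 0.5), (64 * 1_024, 0.3), (1_024 * 1_024, 0.2)]  # (bytes, share of files)
EXTENSIONS = [(".txt", 0.4), (".jpg", 0.35), (".pdf", 0.25)]            # (extension, share)
FILES_PER_DIR = 20    # hypothetical average number of files per directory
DIRS_PER_PARENT = 5   # hypothetical fan-out of subdirectories per directory


@dataclass
class FileSpec:
    path: str
    size: int


def split_size(total_bytes, rng):
    """Step 2: draw file sizes from the bucket distribution until the total is used up."""
    buckets, weights = zip(*SIZE_BUCKETS)
    sizes, remaining = [], total_bytes
    while remaining > 0:
        size = min(rng.choices(buckets, weights)[0], remaining)  # last file absorbs the remainder
        sizes.append(size)
        remaining -= size
    return sizes


def build_dirs(n_files):
    """Step 3: create directories level by level until there are enough for all files."""
    needed = max(1, -(-n_files // FILES_PER_DIR))  # ceiling division
    dirs, frontier = ["root"], ["root"]
    while len(dirs) < needed:
        next_level = []
        for parent in frontier:
            for i in range(DIRS_PER_PARENT):
                if len(dirs) == needed:
                    break
                child = f"{parent}/d{i}"
                dirs.append(child)
                next_level.append(child)
        frontier = next_level
    return dirs


def generate_layout(total_bytes, rng):
    """Steps 4-6: place files in directories, name them, and assign extensions."""
    sizes = split_size(total_bytes, rng)
    dirs = build_dirs(len(sizes))
    exts, ext_weights = zip(*EXTENSIONS)
    specs = []
    for idx, size in enumerate(sizes):
        directory = rng.choice(dirs)
        name = "".join(rng.choices(string.ascii_lowercase, k=8)) + f"_{idx}"  # index keeps names unique
        ext = rng.choices(exts, ext_weights)[0]
        specs.append(FileSpec(f"{directory}/{name}{ext}", size))
    return specs


def distribution_error(specs):
    """Validation: largest gap between observed and target size-bucket shares."""
    counts = {bucket: 0 for bucket, _ in SIZE_BUCKETS}
    for spec in specs:
        # A clipped last file falls into the smallest bucket that can hold it.
        bucket = next(b for b, _ in SIZE_BUCKETS if spec.size <= b)
        counts[bucket] += 1
    return max(abs(counts[b] / len(specs) - share) for b, share in SIZE_BUCKETS)


def best_fit_layout(total_bytes, tolerance=0.05, max_attempts=20, seed=0):
    """Regenerate until validation passes; otherwise keep the best attempt."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    best, best_err = None, float("inf")
    for attempt in range(max_attempts):
        specs = generate_layout(total_bytes, random.Random(seed + attempt))
        err = distribution_error(specs)
        if err < best_err:
            best, best_err = specs, err
        if err <= tolerance:
            break
    return best, best_err
```

For the 2x-load requirement, the input would be twice the measured production size, e.g. `best_fit_layout(2 * production_bytes)`, where `production_bytes` is a placeholder for that measurement.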

The following algorithms were explored and applied for data generation.

Text generation

Random text

This is a very simple and fast approach: random text is generated from ASCII characters. The disadvantage is that the output is garbled; it does not make sense and does not form meaningful words like the examples in bold. The advantage is that you can control the size of the generated data very accurately, which is useful for generating file names.
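A minimal Python sketch of the random-text approach follows. The function names `random_text` and `random_file_name` are hypothetical, and the alphabet is restricted to ASCII letters and digits as an assumption so the output is also safe to use in file names. Because each ASCII character is one byte, the requested length is also the exact size in bytes.

```python
import random
import string

# Assumption: letters and digits only, so the output is also usable as a file name.
ALPHABET = string.ascii_letters + string.digits


def random_text(length, rng=random):
    """Return exactly `length` random ASCII characters (garbled, but size-exact)."""
    return "".join(rng.choices(ALPHABET, k=length))


def random_file_name(length, extension=""):
    """Hypothetical helper: a random base name of exactly `length` characters."""
    return random_text(length) + extension
```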

Obfuscated using Caesar's cipher

This approach is also simple and fast, but it requires a corpus, i.e., a selection of words of different lengths. Suppose you need a three-character word and select "MAN" from your bag of words: shifting each character by three turns MAN into P-D-Q. Likewise, ZEBRA becomes E-J-G-W-F with a shift of five. This only weakly protects the original corpus, because with a limited vocabulary it is easy to guess the original word even without knowing the shift. If your corpus is public data, there is no need to apply the cipher at all: simply select words of the matching length from the bag.
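The cipher step can be sketched as follows, reproducing the MAN and ZEBRA examples above. The helper names and the small in-memory corpus used in the usage line are illustrative assumptions, and only uppercase letters are shifted.

```python
import random
import string


def caesar(word, shift):
    """Shift each uppercase letter by `shift`, wrapping around after Z."""
    out = []
    for ch in word:
        if ch in string.ascii_uppercase:
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            out.append(ch)  # leave other characters untouched
    return "".join(out)


def obfuscated_word(corpus, length, shift, rng=random):
    """Pick a corpus word of the requested length and apply the cipher to it."""
    candidates = [w for w in corpus if len(w) == length]
    if not candidates:
        raise ValueError(f"no corpus word of length {length}")
    return caesar(rng.choice(candidates), shift)
```

For example, `obfuscated_word(["MAN", "ZEBRA", "CAT"], 5, 5)` can only pick ZEBRA and therefore returns `"EJGWF"`.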

Statistical model

If you have a large corpus of words, you can parse it and store the distribution of characters at each position along with their probabilities. Example:

Prediction:

- 1st character: T (100% probability)
- 2nd character: H (100% probability)
- 3rd character: E (33% probability) or I (67% probability)
- 4th character: - (end of word, 33%), N (33%), or S (33%)

Possible predictions: THE, THI, THEN, THIN, THES, THIS

Because each position is predicted independently, some of these predictions may not occur in the corpus and may be non-dictionary words, such as “THES.”
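A sketch of this position-based model follows. The article does not list the corpus behind the example, so the three-word corpus THE, THIN, THIS used in the usage note is an assumption; it happens to reproduce the probabilities listed above. `END` stands in for the "-" (end-of-word) symbol, and the function names are hypothetical.

```python
import random
from collections import Counter

END = "-"  # end-of-word marker, matching the "-" in the example


def positional_model(corpus):
    """For each position, the probability of each character (or END) across the corpus."""
    max_len = max(len(w) for w in corpus)
    model = []
    for pos in range(max_len + 1):
        counts = Counter()
        for w in corpus:
            if pos < len(w):
                counts[w[pos]] += 1
            elif pos == len(w):
                counts[END] += 1  # the word ends exactly here
        total = sum(counts.values())
        model.append({ch: n / total for ch, n in counts.items()})
    return model


def sample_word(model, rng=random):
    """Draw one character per position independently until END is drawn."""
    chars = []
    for dist in model:
        symbols, weights = zip(*dist.items())
        ch = rng.choices(symbols, weights)[0]
        if ch == END:
            break
        chars.append(ch)
    return "".join(chars)
```

With `positional_model(["THE", "THIN", "THIS"])`, position three gives E with probability 1/3 and I with 2/3, and `sample_word` can produce all six predictions, including the non-corpus THES and THI.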

Markov chain

The last algorithm implemented is similar to the previous one, except that each choice depends on what came before: if the third character is I, the fourth character will be N or S, each with 50% probability. As with the previous model, the example uses characters, but the implementation works on words, not characters.
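The character-level example can be sketched as a first-order Markov chain, where each character depends only on the one before it. The corpus THE, THIN, THIS is again an assumption chosen to match the example, and the `START`/`END` markers and function names are hypothetical. Unlike the positional model, this chain can only emit THE, THIN, or THIS for that corpus.

```python
import random
from collections import defaultdict

START, END = "^", "$"  # hypothetical start/end-of-word markers


def train_char_chain(corpus):
    """Map each character to the list of characters observed right after it."""
    transitions = defaultdict(list)
    for word in corpus:
        symbols = [START] + list(word) + [END]
        for prev, nxt in zip(symbols, symbols[1:]):
            transitions[prev].append(nxt)
    return transitions


def generate_word(transitions, rng=random, max_len=20):
    """Walk the chain from START; repeated entries make the choice frequency-weighted."""
    out, current = [], START
    while len(out) < max_len:
        current = rng.choice(transitions[current])
        if current == END:
            break
        out.append(current)
    return "".join(out)
```

For the assumed corpus, the transitions after I are N and S, so a word that reaches I continues with N or S with 50% probability each, as described above.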

There are also three variations of the algorithm: a unigram is a one-word sequence, a bigram is a two-word sequence, and a trigram is a three-word sequence. A unigram model does not depend on the previous word. For example, “statistics” is a unigram (n = 1), “machine learning” is a bigram (n = 2), and “natural language processing” is a trigram (n = 3). If the selected combination does not match any known pattern, random words are selected instead. The same approach is used to initialize the chain. These algorithms are used for textual content, such as plain-text (Notepad) files and file or directory names.
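The word-level variant can be sketched as a generic n-gram chain, where `n = 1` gives the unigram case, `n = 2` the bigram case, and `n = 3` the trigram case. As described above, the chain is initialized with random words, and a random word is chosen whenever the current context was never seen in the corpus. This is an illustrative sketch with hypothetical names, not the production implementation.

```python
import random
from collections import defaultdict


def train_ngram(words, n):
    """Map each (n-1)-word context to the words that followed it in the corpus."""
    model = defaultdict(list)
    for i in range(len(words) - n + 1):
        context = tuple(words[i : i + n - 1])  # empty tuple for unigrams
        model[context].append(words[i + n - 1])
    return model


def generate_text(words, n, length, rng=random):
    model = train_ngram(words, n)
    vocabulary = sorted(set(words))
    # Initialize the chain with n-1 random words.
    out = [rng.choice(vocabulary) for _ in range(n - 1)]
    while len(out) < length:
        context = tuple(out[len(out) - (n - 1):])  # last n-1 words
        followers = model.get(context)
        if followers:
            out.append(rng.choice(followers))
        else:
            out.append(rng.choice(vocabulary))  # unknown context: fall back to a random word
    return out[:length]
```

For example, `" ".join(generate_text("the cat sat on the mat".split(), n=2, length=10))` produces ten words in which each word, after the first, follows its predecessor somewhere in the corpus whenever that predecessor has a known successor.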

Binary data (files such as videos, images, or PDFs)

Random bytes are generated and packed into a file of the desired size, and compressibility is controlled by choosing the range of the random values. It is a simple but very effective solution. Range and compression ratio are inversely related: the larger the range, the lower the compression ratio.
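The binary approach can be sketched as below. Each byte is drawn uniformly from `0..value_range-1`, so a smaller range means fewer distinct byte values and more redundancy for a compressor to exploit. The function names are hypothetical, and `zlib` is used only as a convenient stand-in for measuring compressibility; the product's own compression may behave differently.

```python
import random
import zlib


def binary_payload(size, value_range, seed=None):
    """Return `size` random bytes, each drawn from 0..value_range-1."""
    if not 1 <= value_range <= 256:
        raise ValueError("value_range must be between 1 and 256")
    rng = random.Random(seed)
    return bytes(rng.choices(range(value_range), k=size))


def write_binary_file(path, size, value_range, seed=None):
    """Pack the random bytes into a file of exactly `size` bytes."""
    with open(path, "wb") as f:
        f.write(binary_payload(size, value_range, seed))


def compression_ratio(data):
    """Original size divided by zlib-compressed size (higher = more compressible)."""
    return len(data) / len(zlib.compress(data))
```

Comparing `compression_ratio(binary_payload(100_000, r, seed=1))` for r = 4, 16, and 256 should show the ratio falling as the range grows, with r = 256 being essentially incompressible.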

Conclusion

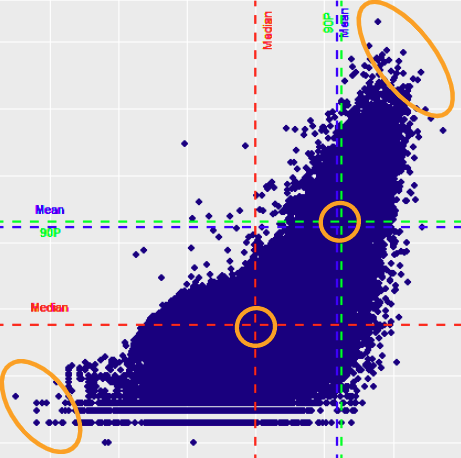

To obtain production-like test data, the characteristics that affect product behavior were identified, analyzed in production, and modeled. The model was then used to generate test data for lower (non-production) environments, and the resulting data sets were used in feature testing, scale testing, and performance testing.

Depending on the industry and the problem at hand, production workloads can be characterized in a similar way, and test data can then be generated from that characterization so that products are tested more realistically.

Next Steps

Learn more about the technical innovations and best practices powering cloud backup and data management on the Innovation Series section of Druva’s blog archive.