Principles of XAI

The four principles of explainable AI are:

Explanation

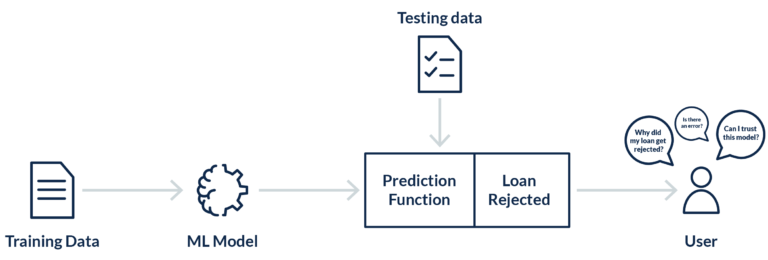

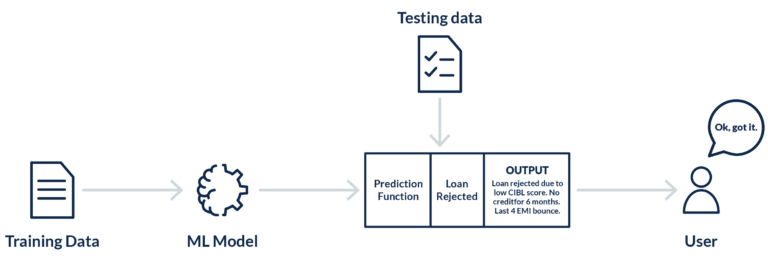

An AI system should be capable of explaining its output, with evidence to support the explanation. The type of explanation a model provides depends on the system's end-users. Types include:

- Explanations that are designed to benefit end-users by informing them about outputs. An example is a model that processes loan applications and provides reasons why the model approved or denied a loan.

- Explanations designed to gain trust and acceptance in society. The loan application example may fall into this category if the explanations are detailed enough to help users understand why certain decisions were made, even when the outcome is not what the user wanted (e.g. denial of a loan application).

Meaningful

An AI system's explanation is meaningful if its intended recipient understands it. If users can grasp the reasons the system provides, the model satisfies this principle.

This principle does not imply that there is a one-size-fits-all answer. A single model may need different explanations for different groups of users, and the meaningful principle calls for explanations tailored to each group.

Explanation accuracy

An AI system should be able to describe accurately how it arrived at its output. This principle concerns explanation accuracy, which is not the same as decision accuracy: a model can make a correct decision and explain it poorly, or explain an incorrect decision faithfully.
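The distinction can be made concrete with a small sketch. It assumes that explanation accuracy is measured as fidelity, meaning how often a simplified explanation model agrees with the black-box model. That is one common way to quantify it, not something the principle prescribes. All labels and predictions below are made-up illustrative values.

```python
from typing import Sequence

def agreement(a: Sequence[int], b: Sequence[int]) -> float:
    """Fraction of positions where two label sequences match."""
    return sum(x == y for x, y in zip(a, b)) / len(a)

# Hypothetical data: ground truth, black-box predictions, and the
# predictions implied by a simplified explanation of the black box.
true_labels = [1, 0, 1, 1, 0, 0, 1, 0]
model_preds = [1, 0, 0, 1, 0, 1, 1, 0]
explanation_preds = [1, 0, 0, 1, 0, 1, 1, 1]

# Decision accuracy: does the model match reality?
decision_accuracy = agreement(model_preds, true_labels)

# Explanation accuracy (as fidelity): does the explanation match the model?
explanation_fidelity = agreement(explanation_preds, model_preds)

print(f"decision accuracy:    {decision_accuracy}")
print(f"explanation fidelity: {explanation_fidelity}")
```

Here the explanation tracks the model more closely than the model tracks the ground truth, which shows that the two quantities move independently.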

Knowledge limits

An AI system should operate within its knowledge limits. This prevents the inaccurate outcomes that may arise when the ML model is applied to inputs outside the conditions it was designed for.

To satisfy this principle, a system should identify (and declare) its knowledge limits. This helps maintain trust in the system's outputs and reduces the risk of misleading or incorrect decisions. Consider a model built to classify fish species as part of an aquaculture system. Suppose the model is shown an image of debris, or of something unrelated such as a dog. The model should indicate that it did not identify any fish rather than produce a misleading identification.
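A minimal sketch of this behavior follows, assuming the classifier exposes per-class probabilities and that a simple confidence threshold is used to decide when to abstain. The function name, the threshold value, and the scores are hypothetical. Raw confidence is only a rough proxy for knowledge limits, and real systems often add dedicated out-of-distribution checks.

```python
from typing import Dict, Optional

# Hypothetical cut-off: below this confidence the system declines to answer.
CONFIDENCE_THRESHOLD = 0.80

def classify_with_limits(probabilities: Dict[str, float],
                         threshold: float = CONFIDENCE_THRESHOLD) -> Optional[str]:
    """Return the most likely label, or None when confidence is too low."""
    if not probabilities:
        return None
    label, confidence = max(probabilities.items(), key=lambda kv: kv[1])
    return label if confidence >= threshold else None

# Illustrative scores: a clear fish image versus an image of debris.
fish_scores = {"salmon": 0.93, "trout": 0.05, "carp": 0.02}
debris_scores = {"salmon": 0.40, "trout": 0.35, "carp": 0.25}

print(classify_with_limits(fish_scores))    # salmon
print(classify_with_limits(debris_scores))  # None
```

Returning `None` lets the calling system report "no fish identified" instead of passing along a low-confidence guess.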

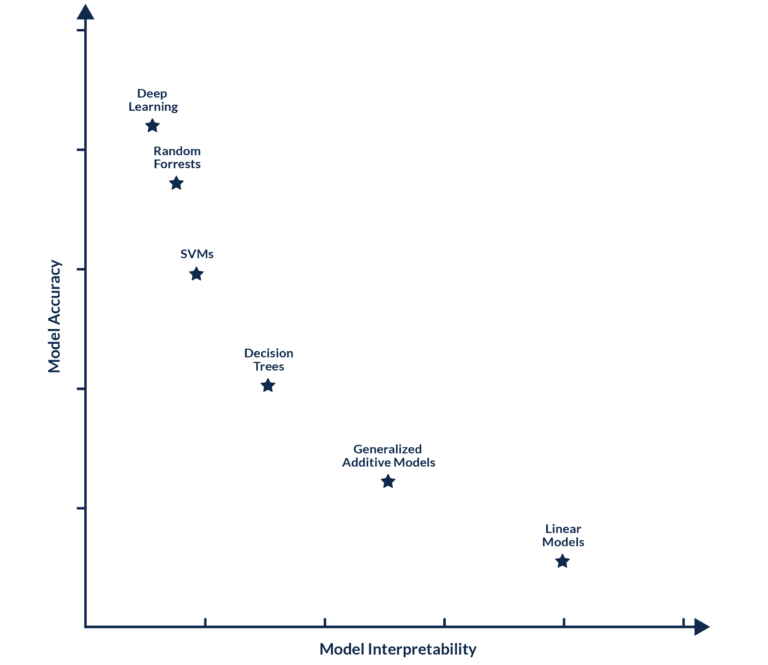

To achieve the principles of XAI, a suite of ML techniques has emerged. These techniques produce models that offer an acceptable trade-off between explainability and predictive utility, and they enable humans to understand, trust, and manage the emerging generations of AI models. Two frameworks have been widely recognized as the state of the art in XAI:

- The LIME (Local Interpretable Model-agnostic Explanations) framework, introduced by Ribeiro et al. (2016)

- SHAP (SHapley Additive exPlanations) values, introduced by Lundberg and Lee (2017)

LIME and SHAP are surrogate model techniques for opening up black-box machine learning models. Rather than trying to understand how an AI model scores every data point, they examine how each variable contributed to the score the model gave one particular data point. In other words, they interpret the model locally, around a single data point, rather than as a whole. To do so, they tweak the input slightly and observe how the prediction changes. Each change has to be small so that the perturbed input still closely matches the original data point.
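The idea of tweaking the input and watching the prediction can be sketched in a few lines. This is a simplified one-feature-at-a-time sensitivity check, not the actual LIME or SHAP algorithm (LIME, for instance, fits a weighted linear model over many random perturbations). The scoring function, feature names, and values are hypothetical stand-ins for an opaque model.

```python
from typing import Callable, Dict

def black_box_score(x: Dict[str, float]) -> float:
    """Hypothetical stand-in for an opaque, nonlinear scoring model."""
    return (0.5 * x["income"]
            - 2.0 * x["debt"] ** 2
            + 0.1 * x["income"] * x["years_employed"])

def local_sensitivities(model: Callable[[Dict[str, float]], float],
                        point: Dict[str, float],
                        step: float = 1e-3) -> Dict[str, float]:
    """Nudge each feature slightly and record the change in prediction per unit."""
    base = model(point)
    effects = {}
    for name, value in point.items():
        nudged = dict(point)          # copy so the original point is untouched
        nudged[name] = value + step   # small change keeps us near the data point
        effects[name] = (model(nudged) - base) / step
    return effects

# Illustrative applicant, with features on an arbitrary scale.
applicant = {"income": 4.0, "debt": 1.5, "years_employed": 3.0}
effects = local_sensitivities(black_box_score, applicant)

for name, effect in effects.items():
    print(f"{name}: {effect:+.3f}")
```

The result describes the model only in the neighborhood of this one applicant. A different applicant would generally yield different sensitivities, which is what makes the interpretation local.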

The advantage of LIME and SHAP is that they can simplify complex models, such as random forests, by breaking down the score for each data point into the contributions of individual variables. Both techniques rely on approximation, so their explanations are estimates of the model's behavior rather than exact descriptions of it.
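The following sketch shows what breaking a score into per-variable contributions looks like, by computing exact Shapley values for a tiny model. It assumes that an absent feature is represented by a fixed baseline value and it enumerates every feature ordering, which is feasible only for a handful of features. Practical SHAP implementations approximate this computation. The model, feature names, and values are hypothetical.

```python
from itertools import permutations
from typing import Callable, Dict

def model(x: Dict[str, float]) -> float:
    """Hypothetical scoring model with an interaction term."""
    return 2.0 * x["income"] - 3.0 * x["debt"] + x["income"] * x["debt"]

def shapley_values(model: Callable[[Dict[str, float]], float],
                   point: Dict[str, float],
                   baseline: Dict[str, float]) -> Dict[str, float]:
    """Average each feature's marginal contribution over all feature orderings."""
    names = list(point)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)       # start with every feature "absent"
        previous = model(current)
        for name in order:
            current[name] = point[name]  # switch this feature to its real value
            updated = model(current)
            totals[name] += updated - previous
            previous = updated
    return {name: total / len(orderings) for name, total in totals.items()}

point = {"income": 3.0, "debt": 2.0}
baseline = {"income": 0.0, "debt": 0.0}  # assumed reference input
phi = shapley_values(model, point, baseline)

print(phi)
print(sum(phi.values()), model(point) - model(baseline))
```

The contributions add up to the difference between the prediction for the data point and the prediction for the baseline, which is the additive property that gives SHAP its name.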

Conclusion

Explainable AI is a new and growing approach that helps users and businesses comprehend the decisions their AI technology suggests and the consequences of those decisions. With the constant advancement and application of new technologies, adapting to and understanding these changes is essential. Many sectors will require explainable AI to comprehend the insights, forecasts, and predictions of AI and ML systems. Now is an ideal time to embrace and seize the opportunities of explainable AI.
