According to a Gartner report, storage data de-duplication and virtualization are the two main technologies driving innovation in storage management software this year. This makes sense, considering that corporate data is growing at a whopping 60% annual rate (according to a Microsoft report).

Server Backup

Data is rarely common between production servers of different types. It's not difficult to imagine that an Exchange email server will not hold the same content as an Oracle database server. But data is largely duplicated within groups of similar servers: file servers, Exchange servers, and, say, a bunch of ERP servers (development and test). This duplication creates potential bottlenecks in the bandwidth and storage used for backup.

Existing players have offered two solutions to this problem:

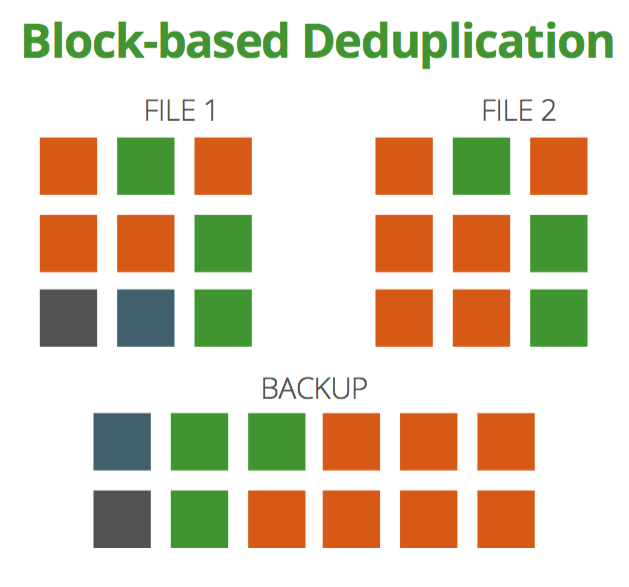

- Traditional single-instancing at the backup server to filter out common content, e.g. Microsoft Single Instance Service (in Data center edition). How much this saves depends on the level at which commonalities are filtered: file, block, or byte. A big player in this space is Data-Domain. These solutions don't have a client component, so they save only storage space, not bandwidth.

- Newer solutions like Avamar (now with EMC) and PureDisk (now with Veritas), which try to filter content at the backup server level before the data goes to the (remote) store. This makes them much better suited for remote-office backups, since they save both bandwidth and storage.
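To make the difference between the two approaches concrete, here is a minimal sketch of block-level single-instancing. It is not how any of the products above are implemented; the class name `SingleInstanceStore`, the fixed block size, and the choice of SHA-256 as the checksum are all assumptions made for illustration. Each unique block is stored once, and `put` reports how many bytes were actually new, which is roughly what would have to cross the wire if the filtering happened before the data left for the store.

```python
import hashlib


class SingleInstanceStore:
    """Toy block-level single-instance store (hypothetical, for illustration)."""

    def __init__(self, block_size=4096):
        self.block_size = block_size
        self.blocks = {}  # checksum -> block bytes; each unique block kept once
        self.files = {}   # file name -> ordered list of block checksums

    def put(self, name, data):
        """Store a file and return the number of bytes that were new."""
        checksums = []
        new_bytes = 0
        for start in range(0, len(data), self.block_size):
            block = data[start:start + self.block_size]
            checksum = hashlib.sha256(block).hexdigest()
            if checksum not in self.blocks:
                self.blocks[checksum] = block
                new_bytes += len(block)
            checksums.append(checksum)
        self.files[name] = checksums
        return new_bytes

    def get(self, name):
        """Rebuild a file from its list of block checksums."""
        return b"".join(self.blocks[c] for c in self.files[name])

    def stored_bytes(self):
        """Total bytes held after single-instancing."""
        return sum(len(block) for block in self.blocks.values())


store = SingleInstanceStore(block_size=4)
first = store.put("a.txt", b"AAAABBBBCCCC")   # all three blocks are new
second = store.put("b.txt", b"AAAABBBBDDDD")  # only the last block is new
print(first, second, store.stored_bytes())    # prints: 12 4 16
```

With filtering only at the store, all 24 bytes still travel over the network and 16 are kept. With filtering before transfer, only the 16 new bytes need to be sent.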

But there are three unsolved problems with both these approaches (which also explains the poor response to these products in the market):

- Most of the time, simple block checksum matching fails to find common data, because the common data may not fall on block boundaries. E.g. if you insert a single byte into a file, all the blocks after it shift, every block checksum changes, and the block checksum approach fails.

- Checksum calculation is very costly and makes backups CPU-intensive.

- These approaches target storage cost, not time or bandwidth, which are more critical.
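The block-boundary problem in the first point is easy to reproduce. The sketch below assumes fixed-size blocks and SHA-256 checksums; the helper names `block_checksums` and `shared_blocks` are hypothetical. It compares a file against two modified copies, one with a byte appended at the end and one with a byte inserted at the start.

```python
import hashlib


def block_checksums(data, block_size=8):
    """Checksum every fixed-size block of data (the last block may be short)."""
    return [
        hashlib.sha256(data[start:start + block_size]).hexdigest()
        for start in range(0, len(data), block_size)
    ]


def shared_blocks(old, new, block_size=8):
    """Count distinct block checksums that appear in both versions."""
    return len(set(block_checksums(old, block_size)) &
               set(block_checksums(new, block_size)))


original = bytes(range(64))        # 8 distinct blocks of 8 bytes each
appended = original + b"\xff"      # one byte added at the end
inserted = b"\xff" + original      # one byte added at the start

print(shared_blocks(original, appended))  # prints: 8
print(shared_blocks(original, inserted))  # prints: 0
```

Appending leaves every existing block intact, so all 8 still match. Inserting one byte at the front shifts every block boundary, so nothing matches even though 64 of the 65 bytes are unchanged.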

PC Backups

The problem is much more complex at the PC level, as duplicated data is distributed among users and is as high as 90% in some cases. Emails, documents, and similar file formats create a large pool of duplicate data between users.